How far can we get in developing language models like GPT-3 to let them represent the view of a philosopher?

YOU MAY ENJOY WATCHING A TALK THAT FEATURES THE DIGIDAN INSTALLATION:

I am working on the question of what digital replicas are and investigating their relation to their origins. This led me to ask: how far can we get in developing language models like GPT-3 to let them represent the view of a philosopher?

WHAT is GPT-3? GPT-3 is a neural network trained to predict the next likely word in a sequence. Technically, it is a language model with 175 billion parameters that performs strongly on many natural language processing tasks. The abbreviation stands for Generative Pre-trained Transformer!
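To make "predict the next likely word" concrete, here is a deliberately tiny sketch in Python. It is not GPT-3: GPT-3 uses a transformer over sub-word tokens and billions of learned parameters, whereas this toy simply counts which word follows which in a training text (a bigram model) and picks the most frequent follower. The function names and the example sentence are hypothetical, chosen only for illustration.

```python
from collections import Counter, defaultdict
from typing import Optional


def train_bigram_model(text: str) -> dict[str, Counter]:
    """Count, for every word, which words follow it in the training text."""
    words = text.lower().split()
    follow_counts: dict[str, Counter] = defaultdict(Counter)
    for current, following in zip(words, words[1:]):
        follow_counts[current][following] += 1
    return follow_counts


def predict_next(model: dict[str, Counter], word: str) -> Optional[str]:
    """Return the most frequent follower of word, or None if nothing ever followed it."""
    followers = model.get(word.lower())  # .get does not add keys to a defaultdict
    if not followers:
        return None
    return followers.most_common(1)[0][0]


def generate(model: dict[str, Counter], start: str, length: int) -> list[str]:
    """Greedily extend start by repeatedly appending the predicted next word."""
    sequence = [start.lower()]
    for _ in range(length):
        following = predict_next(model, sequence[-1])
        if following is None:
            break
        sequence.append(following)
    return sequence


# Hypothetical toy corpus, for illustration only.
corpus = "the mind is what the brain does and the brain is a machine"
model = train_bigram_model(corpus)
print(predict_next(model, "the"))  # prints brain: it follows "the" twice, "mind" only once
print(generate(model, "the", 3))
```

Replacing the counting step with a trained neural network, and the single preceding word with a long context window, is roughly the jump from this toy to a model like GPT-3. The predict-then-append loop stays conceptually the same, although GPT-3 usually samples from its predicted distribution rather than always taking the top word.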


Fine-tuning the Davinci model of GPT-3 on the corpus of Daniel Dennett, in collaboration with Eric Schwitzgebel and Matt Crosby

Our research questions are set out in: Strasser, A., Crosby, M., Schwitzgebel, E. (2023). How far can we get in creating a digital replica of a philosopher? In R. Hakli, P. Mäkelä, J. Seibt (eds.), Social Robots in Social Institutions. Proceedings of Robophilosophy 2022. Series Frontiers in Artificial Intelligence and Applications, vol. 366, 371-380. IOS Press, Amsterdam. doi:10.3233/FAIA220637

This picture illustrates the project: on the left, you see the complete works of a philosopher; in the middle, the GPT-3 model we fine-tuned; on the right, the not-yet-existing manual for operating this machine.

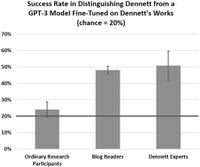
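For readers wondering what fine-tuning on a corpus involves in practice: OpenAI's original GPT-3 fine-tuning service took training data as a JSONL file in which each line is a record with a "prompt" and a "completion" field. The sketch below shows one way such a file could be prepared from an author's texts. It is an illustration under stated assumptions, not the procedure used in this project: the empty prompts, the word-based chunking (a rough stand-in for token counts), the 1,500-word default and all function names are hypothetical.

```python
import json
from pathlib import Path


def chunk_words(text: str, max_words: int) -> list[str]:
    """Split text into consecutive chunks of at most max_words words.

    Assumption: word counts are only a rough proxy for the token limits
    a real fine-tuning service enforces.
    """
    words = text.split()
    return [" ".join(words[i:i + max_words]) for i in range(0, len(words), max_words)]


def build_finetune_records(texts: list[str], max_words: int = 1500) -> list[dict]:
    """Turn raw texts into prompt/completion records.

    Hypothetical design choice: every record has an empty prompt, so the model
    is trained to produce the author's text without any cue.
    """
    records = []
    for text in texts:
        for chunk in chunk_words(text, max_words):
            records.append({"prompt": "", "completion": chunk})
    return records


def write_jsonl(records: list[dict], path: Path) -> None:
    """Write one JSON object per line (the JSONL format)."""
    with path.open("w", encoding="utf-8") as f:
        for record in records:
            f.write(json.dumps(record, ensure_ascii=False) + "\n")
```

A call such as `write_jsonl(build_finetune_records(texts), Path("corpus_finetune.jsonl"))`, where `texts` holds plain-text versions of the works and the file name is hypothetical, produces a file in this format, which would then be uploaded for fine-tuning.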

Experiment to evaluate the model, in collaboration with Eric and David Schwitzgebel

We asked the real Dennett ten philosophical questions and then posed the same questions to the language model, collecting four responses for each question without cherry-picking. Experts on Dennett's work succeeded at distinguishing Dennett's own answers from the machine-generated ones above chance, but substantially short of our expectations. Philosophy blog readers performed similarly to the experts, while ordinary research participants were near chance at distinguishing GPT-3's responses from those of an “actual human philosopher”.

Schwitzgebel, E., Schwitzgebel, D., Strasser, A. (2023). Creating a Large Language Model of a Philosopher. Mind & Language, 1–22. https://doi.org/10.1111/mila.12466
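What "above chance" means depends on the task format. Assuming each question was presented with Dennett's answer among the four GPT-3 answers, and participants had to pick which one was Dennett's, guessing would be right one time in five (20%). The sketch below computes that chance rate and a one-sided binomial tail probability, i.e. how likely a given score on the ten questions would be under pure guessing. The score used is hypothetical, not a result from the study, and the study's own statistical analysis may differ.

```python
from math import comb


def chance_rate(n_model_answers: int) -> float:
    """Probability of picking the one human answer by guessing among 1 + n_model_answers options."""
    return 1 / (1 + n_model_answers)


def p_at_least(k: int, n: int, p: float) -> float:
    """One-sided binomial tail: P(X >= k) for X ~ Binomial(n, p)."""
    return sum(comb(n, i) * p**i * (1 - p) ** (n - i) for i in range(k, n + 1))


# Assumption: four GPT-3 answers plus Dennett's answer per question (five options).
p_chance = chance_rate(4)
n_questions = 10

# Hypothetical participant score, NOT a result reported in the study.
correct = 5
tail = p_at_least(correct, n_questions, p_chance)
print(f"chance rate: {p_chance:.0%}")
print(f"P(at least {correct}/{n_questions} correct by guessing): {tail:.3f}")
```

With the hypothetical score of 5 out of 10, the tail probability is about 0.033, so such a score would be unlikely to arise from guessing alone, even though it is far from perfect.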