Collection of papers from the last few months

New articles on large language models (LLMs) are published every day, and it is almost impossible to stay up to date. I have started collecting interesting ones, listed below in alphabetical order by author.

But first, a BBC piece on AI sentience:

https://www.bbc.com/reel/video/p0f73vlw/can-artificial-intelligence-ever-be-sentient-

Ananthaswamy, Anil (2023). A new approach to computation reimagines artificial intelligence. |

https://www.quantamagazine.org/print

| enormous vectors with semantic meaning reason more abstractly |

Browning & LeCun (2023). What AI Can Tell Us About Human Intelligence. Noema. |

https://www.noemamag.com/what-ai-can-tell-us-about-intelligence/ | manipulate symbols? |

Burges (2023). The Hacking of ChatGPT Is Just Getting Started. WIRED. |

https://www.wired.com/story/chatgpt-jailbreak-generative-ai-hacking

| jailbreaking LLMs to get around safety rules |

Chiang, T. (2023) ChatGPT Is a Blurry JPEG of the Web. The New Yorker. |

https://www.newyorker.com/tech/annals-of-technology/chatgpt-is-a-blurry-jpeg-of-the-web

| paraphrases instead of quotes |

Davies, Ernest (2022). Comments on the commonsense datasets in the BIG-bench collection. |

https://cs.nyu.edu/~davise/Benchmarks/BigBenchDiscussion.html

|

benchmarks |

Davis, E., Hendler, J., Hsu, W., Leivada, E., Marcus, G., Witbrock, M., Shwartz, V., & Ma, M. (2023). ChatGPT/LLM error tracker. |

https://researchrabbit.typeform.com/llmerrors?typeform-source=garymarcus.substack.com

|

failures |

Dickson, Ben (2023). Why ChatGPT might slow down AGI. |

https://bdtechtalks.substack.com/p/why-chatgpt-might-slow-down-agi?utm_source=substack&utm_medium=email

| AGI |

Durt, C., Fuchs, T. & Froese, T. (2023). Against AI Understanding and Sentience: Large Language Models, Meaning, and the Patterns of Human Language Use. |

http://philsci-archive.pitt.edu/21977/

| PREPRINT opinion (understanding) |

Gallagher, Brian (2023). Does GPT-4 Really Understand What We’re Saying. Nautilus. |

https://nautil.us/does-gpt-4-really-understand-what-were-saying-291034/

|

interview with David Krakauer

|

Gilson (2023). Are A.I. Text Generators Thinking Like Humans — Or Just Very Good at Convincing Us They Are. Stanford Graduate School of Business. |

https://www.gsb.stanford.edu/insights/are-ai-text-generators-thinking-humans-or-just-very-good-convincing-us-they-are

|

opinion (humanlikeless & convincing)

|

Guardian editorial (10 Feb 2023). The Guardian view on ChatGPT search: exploiting wishful thinking. The Guardian. |

https://www.theguardian.com/commentisfree/2023/feb/10/the-guardian-view-on-chatgpt-search-exploiting-wishful-thinking?CMP=share_btn_link

|

reliability

|

Heaven, Will Douglas (2023).The inside story of how ChatGPT was built from the people who made it. MIT Technology Review. |

opinion | |

Huang, K. (2023). Alarmed by A.I. chatbots, universities start revamping how they teach. The New York Times. |

https://www.nytimes.com/2023/01/16/technology/chatgpt-artificial-intelligence-universities.html

|

detection (by humans)

|

Kirchenbauer, J., Geiping, J., Wen, Y., Katz, J., Miers, I., & Goldstein, T. (2023). A Watermark for Large Language Models. |

https://doi.org/10.48550/arXiv.2301.10226

|

detection (watermark)

|

Lionbridge (2023). What ChatGPT gets right and wrong and why it’s probably a game-changer for the localization industry. |

https://www.lionbridge.com/content/dam/lionbridge/pages/whitepapers/whitepaper-what-chatgpt-gets-right-and-wrong/chatgpt-whitepaper-english.pdf

|

opinion (performance)

|

Liu, N.F., Zhang, T., & Liang, P. (2023). Evaluating Verifiability in Generative Search Engines. |

PREPRINT | |

Mahowald, K., Ivanova, A. A., Blank, I. A., Kanwisher, N., Tenenbaum, J. B., & Fedorenko, E. (2023). Dissociating language and thought in large language models: a cognitive perspective. |

PREPRINT | |

Marcus, G., & Davis, E. (2023). Large language models like ChatGPT say the darnedest things. Blog post at The Road to AI We Can Trust (Jan 10). |

https://garymarcus.substack.com/p/large-language-models-like-chatgpt

|

risks

|

Marcus, G. (2023). Inside the Heart of ChatGPT’s Darkness. Blog post at The Road to AI We Can Trust (Feb 11) |

https://garymarcus.substack.com/p/inside-the-heart-of-chatgpts-darkness?utm_source=substack&utm_medium=email

|

risks

|

McCallum (2023). ChatGPT banned in Italy over privacy concerns. BBC News. |

https://www.bbc.com/news/technology-65139406

| regulation |

McQuillan, D. (2023). ChatGPT Is a Bullshit Generator Waging Class War. Vice. |

https://www.vice.com/en/article/akex34/chatgpt-is-a-bullshit-generator-waging-class-war

|

reliability (bullshit) |

Milmo (2023). Italy’s privacy watchdog bans ChatGPT over data breach concerns Artificial intelligence (AI). The Guardian. |

https://www.theguardian.com/technology/2023/mar/31/italy-privacy-watchdog-bans-chatgpt-over-data-breach-concerns

| regulation |

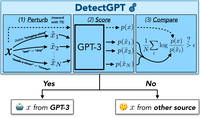

Mitchell, E., Lee, Y., Khazatsky, A., Manning, C.D., & Finn, C. (2023). DetectGPT: Zero-Shot Machine-Generated Text Detection using Probability Curvature. |

detection (by machines) | |

Moran, Chris (2023). ChatGPT is making up fake Guardian articles. Here’s how we’re responding. The Guardian. |

https://www.theguardian.com/commentisfree/2023/apr/06/ai-chatgpt-guardian-technology-risks-fake-article?CMP=Share_iOSApp_Other

|

reliability (fake references)

|

Perrigo, B. (2023). Exclusive: OpenAI Used Kenyan Workers on Less Than $2 Per Hour to Make ChatGPT Less Toxic. The Times. |

https://time.com/6247678/openai-chatgpt-kenya-workers

|

ethical impact |

Rogers, A. (2023). The new Bing is acting all weird and creepy — but the human response is way scarier. Insider. |

https://www.businessinsider.com/weird-bing-chatbot-google-chatgpt-alive-conscious-sentient-ethics-2023-2

|

opinion (the human role)

|

Schaeffer, R., Miranda, B., & Koyejo, S. (2023). Are Emergent Abilities of Large Language Models a Mirage? |

PREPRINT | |

Schwitzgebel, E., Schwitzgebel, D., Strasser, A. (2023). Creating a Large Language Model of a Philosopher. |

PREPRINT | |

Shanahan, M. (2023). Talking About Large Language Models. |

PREPRINT | |

Smith, G. & Funk, J. (2023). A World Without Work Here We Go Again Mind Matters. |

https://mindmatters.ai/2023/04/a-world-without-work-here-we-go-again

|

risks (labor) |

Srivastava, A. et al. (2022). Beyond the Imitation Game: Quantifying and extrapolating the capabilities of language models. |

PREPRINT | |

Strasser, Anna (2023). On pitfalls (and advantages) of sophisticated Large Language Models. |

PREPRINT | |

Thorp, H. (2023). ChatGPT is fun, but not an author. Science, 379(6630), 313-313. | https://www.science.org/doi/10.1126/science.adg7879 |

opinion (authorship) |

Verma & Oremus (2023). What happens when ChatGPT lies about real people. The Washington Post. |

https://www.washingtonpost.com/technology/2023/04/05/chatgpt-lies/

|

reliability (biographical information) |

Walker (2023). Belgian man dies by suicide following exchanges with chatbot. Brussels Times. |

https://www.brusselstimes.com/430098/belgian-man-commits-suicide-following-exchanges-with-chatgpt

|

risks (toxic relationships) |

Whang (2023). Can Intelligence Be Separated From the Body? New York Times. |

https://www.nytimes.com/2023/04/11/science/artificial-intelligence-body-robots.html

| embodiement |

Woodcock, C. (2023). AI Is Tearing Wikipedia Apart. Vice. |

https://www.vice.com/en/article/v7bdba/ai-is-tearing-wikipedia-apart

| risks (truthworthiness) |

Wolfram, S. (2023). What Is ChatGPT Doing … and Why Does It Work. |

https://writings.stephenwolfram.com/2023/02/what-is-chatgpt-doing-and-why-does-it-work

|

TECHNICAL INTRODUCTION

|